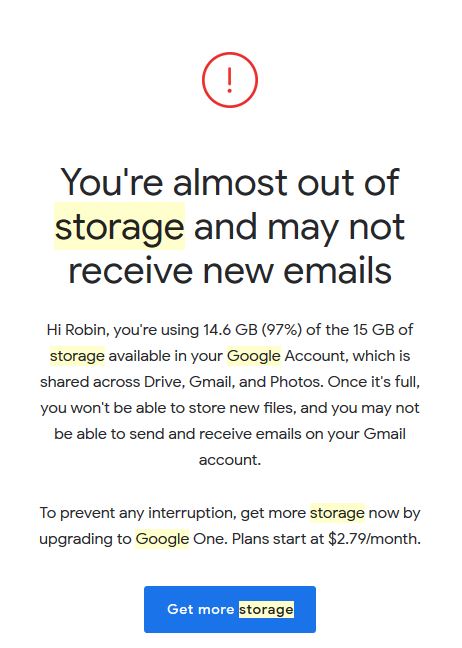

Like billions of people, I have happily used Google search, Gmail and Docs for years, marvelling at how they could provide all that free storage space. However, the fun recently came to an end when I started getting this message from Google:

The message doesn’t suggest deleting things to clear space, let alone provide any helpful tips on how to do so. The message is clearly aimed at scaring people consumed by FOMO (the fear of missing out) into paying for storage.

I’m sure Google told me about the 15GB limit when I signed up years ago, but I had long since forgotten, as I’m sure most people have. So, now we have the choice of deleting lots of things, moving them to physical hard drives or finding a free storage service (good luck with the last one).

I tried deleting things but, after wiping out my biggest files, I still had 14GB of stuff and couldn’t figure out how all my little files could add up to that much. These issues, however, pale compared to the larger one: Google is asking us to pay to store our content, from which they already make huge profits, partly by enabling things like hate speech and disinformation.

As I explained in my rabble article COVID-19 could mean we lose and surveillance capitalists win — again, Google and Facebook make lots of money off hate speech and disinformation like conspiracy theories because they generate lots of engagement. The more engagement, the more they can charge for ads.

The excerpts below from Roger McNamee, author of the book Zucked: Waking up to the Facebook catastrophe, explain why this matters.

Here’s what McNamee said at the May 28, 2019 hearing of Canada’s federal Standing Committee on Access to Information, Privacy and Ethics:

“For Google and Facebook, the business is behavioural prediction. They build a high resolution data avatar of every consumer – a voodoo doll, if you will. They gather a tiny amount of data from user posts and queries but the vast majority of their data comes from surveillance: web tracking, scanning emails and documents, data from apps and third parties and ambient surveillance from products like Alexa, Google Assistant…and Pokemon Go!. Google and Facebook use data voodoo dolls to provide their customers – who are marketers – with perfect information about every consumer. They use the same data to manipulate consumer choices. Just as in China, behavioural manipulation is the goal. The algorithms of Google and Facebook are tuned to keep users on site and active, preferably by pressing emotional buttons that reveal each user’s true self. For most users, this means content that provokes fear or outrage. Hate speech, disinformation and conspiracy theories are catnip for these algorithms.”

In Zucked, McNamee argues that what people do, or influence others to do, when they get access to our data voodoo dolls, legally or illegally, can be devastating:

“The vast majority of the data in your voodoo doll got into the hands of internet platforms without your participation or permission. It bears little relation to the services you value. The harm it causes is generally to other people, which means that other people’s data can harm you. That is what happened to the victims in El Paso, Christchurch, and so many other places. Do we want the power of roughly three billion data voodoo dolls to be available to anyone willing to pay for access? Would it not be better to prevent antivaxxers from leveraging Google’s predictions about pregnancy to indoctrinate unsuspecting mothers-to-be with their conspiracy theory, placing many people at risk of infectious disease? The same question needs to be asked about climate change denial and white supremacy, both of which are amplified by internet platforms. How about election interference and voter suppression? Internet platforms did not create these ills, but they have magnified them. Is it really acceptable for corporations to profit from the algorithmic amplification of hate speech, disinformation, and conspiracy theories? Do we want to reward corporations for damaging society?”

Do we want to pay Google $2.79 a month to damage society? If not, what can we do?

One thing would be to have massive Global Google Deletion Days (with the cool #G2D2 hashtag) where millions of users simultaneously delete big chunks of their data to avoid going over the 15GB limit. This would deprive Google of the extra revenue they’d get from people paying for Google One cloud storage, and the revenue they were making off the deleted content.

Start going through your stuff…and enjoy finding and sharing some gems from your past.